(Cross-posted to MoneyLaw)

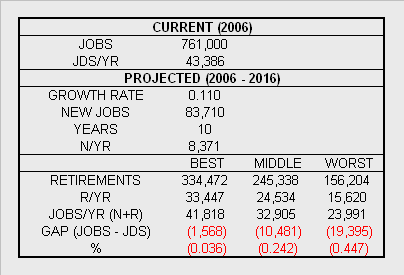

It's not news that the job market is tough for new lawyers right now. But how bad is it? And how bad will it get? Let's look at some recent figures. According to the U.S. Department of Labor, Bureau of Labor Statistics, there were 761,000 lawyers working in America in 2006. The BLS projects that this number will grow by 11% over the decade from 2006 to 2016. That means that we should have about 83,710 new jobs for attorneys by 2016, or an average of about 8,371 new jobs per year.
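
For anyone who wants to check the arithmetic, here is a minimal sketch in Python. The numbers are the BLS figures quoted above; the variable names are just illustrative.

```python
# Figures quoted above from the BLS projection.
lawyers_2006 = 761_000   # lawyers working in 2006
growth_pct = 11          # projected growth from 2006 to 2016, in percent
years = 10               # length of the projection period

# Integer arithmetic keeps the results exact.
new_jobs_decade = lawyers_2006 * growth_pct // 100
new_jobs_per_year = new_jobs_decade // years

print(new_jobs_decade)    # 83710
print(new_jobs_per_year)  # 8371
```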

To estimate the overall employment outlook for new lawyers, we also have to guess at the number who might retire. Retirements should, in theory at least, create more jobs for new attorneys. The ratio won't be one to one, because law firms hire and fire new associates based on overall market conditions, not based on the number of partners retiring or senior associates promoted to partner each year. But to keep this simple we'll just assume that one retirement equals more or less one opening.

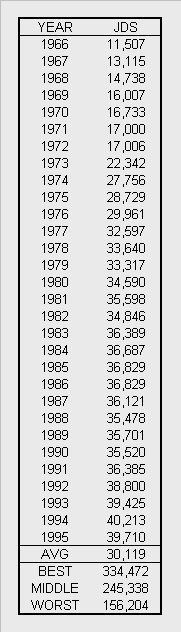

So let's look at some history (PDF). The first table here shows the number of JDs awarded by ABA-approved law schools for the years 1966 through 1995:

It seems reasonable to assume that lawyers who graduated somewhere in this time frame will make up most of those retiring over the decade from 2006 to 2016. Those called to the bar in 1966 had worked for 40 years at the start of the period, and those admitted in 1986 had worked for 20. The numbers marked as BEST and WORST seem like the largest and smallest numbers of attorneys that might retire in the near future. The BEST case assumes that retirees come mainly from the largest cohort, those admitted from 1986 to 1995, so more retirements open up more jobs. The WORST case assumes that retirees are mainly those from the 1966 to 1975 period. The MIDDLE case is the average of these two.

The table below shows the results of adding estimated retirees and average new jobs to figure BEST, MIDDLE, and WORST cases for the number of jobs per year available to new attorneys. From these figures, it seems that in the best case we might have jobs for 96% of new JDs over the next decade. But the middle or worst cases would leave 25% to 45% of new lawyers out of work each year.
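
The scenario arithmetic can be sketched roughly as follows. This is only an illustration of the method described above (openings = retirees + new jobs from growth, with MIDDLE as the average of BEST and WORST). The function name and all of the input numbers are made-up placeholders, not the figures from the tables.

```python
def scenario_coverage(best_retirees, worst_retirees, growth_jobs, new_jds):
    """Return the share of new JDs who could find a job in each case.

    All arguments are per-year counts. One retirement is assumed to
    create one opening, as in the simplification above.
    """
    # The MIDDLE case is the average of the BEST and WORST retiree counts.
    middle_retirees = (best_retirees + worst_retirees) / 2
    cases = {
        "BEST": best_retirees,
        "MIDDLE": middle_retirees,
        "WORST": worst_retirees,
    }
    # Openings per year = retirees + new jobs from growth.
    return {name: (retirees + growth_jobs) / new_jds
            for name, retirees in cases.items()}


# Placeholder inputs, chosen only to make the arithmetic easy to follow.
coverage = scenario_coverage(
    best_retirees=3000, worst_retirees=1000, growth_jobs=500, new_jds=4000
)
for name, share in coverage.items():
    print(f"{name}: jobs for {share:.1%} of new JDs")
```

The share left out of work in each case is simply one minus the coverage figure.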

The projected number of new JDs per year comes from the next table, which shows the number of degrees awarded in 2006 by all ABA-approved law schools. This table also shows the JMRI score for each school. Schools are sorted in descending order of US News Combined Reputation Score. The JMRI is a measure of the proportion of the total openings for new lawyers each year that could be filled by a given school's graduates and the graduates of all schools higher up in the listing. For example, the formula for Stanford is:

JMRI = JDS(Stanford + Yale + Harvard) / JOBS(MIDDLE) * 1,000

So the classes of Stanford and the two schools above it could fill 2.9% of available jobs each year -- as projected in the MIDDLE case -- resulting in a JMRI score of 29. The BEST and WORST columns use a similar formula but give alternative measures for those cases. A JMRI score of 1,000 or higher may be cause for some concern.
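
Here is a rough sketch of how the JMRI formula above could be computed for a whole list of schools. The function name, the school names, and the graduate counts are hypothetical placeholders, not data from the table; the only thing taken from the post is the formula itself (cumulative JDs divided by jobs, times 1,000).

```python
from itertools import accumulate


def jmri_scores(schools, jobs_per_year):
    """Compute a JMRI score for each school.

    schools: list of (name, jds_awarded) pairs, already sorted in
             descending order of reputation score.
    jobs_per_year: projected openings per year for the chosen case.
    """
    # Running total of graduates from this school and all schools above it.
    cumulative = accumulate(jds for _, jds in schools)
    return [(name, total * 1000 / jobs_per_year)
            for (name, _), total in zip(schools, cumulative)]


# Hypothetical inputs for illustration only.
example_schools = [("School A", 200), ("School B", 300), ("School C", 500)]
scores = jmri_scores(example_schools, jobs_per_year=10_000)

for name, score in scores:
    print(name, score)
```

With these placeholder numbers, School C and the two schools above it together graduate 1,000 JDs against 10,000 openings, or 10% of the jobs, which gives a score of 100. A score of 1,000 would mean that the school and everyone above it could fill every projected opening.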

This is only a crude metric because of course the job market doesn't fill from top to bottom in strict reputation score order. Law firms tend to hire from a certain number of national schools and then from local schools in their region -- not indiscriminately from all schools in the country. Larger firms tend to hire more graduates from national schools, and smaller firms do more of their hiring locally. Also, students at a "riskier" school with very high grades and prior work experience in high demand may have much better prospects than graduates with no such assets at a less "risky" school. So the JMRI score is just a relative estimate of the overall risk of not finding a job for graduates of different schools -- not an absolute predictor of the outlook for lawyers from any one school.

Of course, the cases all assume job growth for new lawyers will at least match BLS projections. Given the current rumors of mass layoffs, mergers, and even some firms folding, that may or may not happen. The new JD figures per year also do not include graduates from recently approved schools at Charlotte, Elon, and Drexel ... or UC Irvine ... or the ten new law schools now under proposal or development. They also include no graduates from the Peking University Law School, which according to Bill Henderson also has plans to seek ABA accreditation.